6 lessons from Cloudflare's June 2022 outage

On June 21, 2022, Cloudflare, the US-based global content delivery network (CDN) provider and security company, suffered an outage that began at 06:27 UTC and lasted until 07:42 UTC. The outage was caused by a network configuration error that affected 19 of Cloudflare's data center locations: Amsterdam, Atlanta, Ashburn, Chicago, Frankfurt, London, Los Angeles, Madrid, Manchester, Miami, Milan, Mumbai, Newark, Osaka, São Paulo, San Jose, Singapore, Sydney, and Tokyo. The outage had a worldwide impact, cutting off access to many apps, such as Discord, for users served from these data centers.

Background

Cloudflare is a major CDN that helps businesses deliver website content quickly and reliably by using a mesh of edge servers connected through a spine layer. To be reachable by users over the internet, Cloudflare uses the Border Gateway Protocol (BGP), the routing protocol of the internet.

Cloudflare enables global access to a large part of the internet and maintains its own BGP routes. BGP is often described as the postal service of the internet: it gives networks a logical way to find the best available route for transferring data. Which routes are propagated is determined by BGP advertising policies that are set by network operators. These policies consist of terms that are evaluated sequentially, so the order of the terms decides whether a given prefix is advertised.

As part of its ongoing efforts to upgrade its existing services, Cloudflare deployed an architectural change on June 21 across the 19 data centers that were affected. A spine layer was being added to improve the mesh of edge servers and make Cloudflare's content delivery infrastructure more resilient and flexible.

During the upgrade, a mistake in the network configuration change altered the sequence of BGP advertisements, causing Cloudflare's IP addresses to be withdrawn from the internet. To complicate matters further, the error also cut Cloudflare engineers off from the affected data centers, making it harder to revert the problematic change.

The misconfiguration, introduced as a diff against the existing configuration, reordered the terms in the BGP prefix advertisement policy. This stopped the affected prefixes from being advertised, removed access to the impacted locations, and prevented servers from reaching origin servers. Cloudflare's internal load balancer also failed and overloaded smaller clusters, amplifying the problems.
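To see why term order matters, here is a minimal sketch that assumes a first-match-wins policy model. It is an illustration only, not Cloudflare's actual router configuration: the `Term` class, the term names, and the prefix (taken from a documentation address range) are all hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_network
from typing import Callable, List


@dataclass
class Term:
    """One policy term: a match condition plus an action (hypothetical model)."""
    name: str
    matches: Callable[[str], bool]
    action: str  # "accept" = advertise the prefix, "reject" = do not advertise


def evaluate(policy: List[Term], prefix: str) -> str:
    """Walk the terms in order and return the action of the first match."""
    for term in policy:
        if term.matches(prefix):
            return term.action
    return "reject"  # assumed default when no term matches


# 192.0.2.0/24 is a documentation range used here as a stand-in.
SITE_LOCAL = ip_network("192.0.2.0/24")


def is_site_local(prefix: str) -> bool:
    return ip_network(prefix).subnet_of(SITE_LOCAL)


advertise_site_local = Term("ADVERTISE-SITE-LOCAL", is_site_local, "accept")
reject_the_rest = Term("REJECT-THE-REST", lambda prefix: True, "reject")

intended_policy = [advertise_site_local, reject_the_rest]
reordered_policy = [reject_the_rest, advertise_site_local]

print(evaluate(intended_policy, "192.0.2.0/25"))   # accept
print(evaluate(reordered_policy, "192.0.2.0/25"))  # reject
```

Both policies contain exactly the same terms. Only the order differs, yet in the reordered version the catch-all reject term matches first and the prefix is never advertised.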

Despite these challenges, Cloudflare got to the root cause quickly, communicated the status of recovery effectively, and executed a backup procedure that restored services about 75 minutes after the outage began.

The impact

- The outage significantly impacted internet access for many apps that depend on Cloudflare for the delivery of content to users worldwide, including Discord, Shopify, Fitbit, and FTX.

- Users saw 502 Bad Gateway errors, which indicate that a server acting as a gateway or proxy received an invalid response from the server behind it. Interestingly, many downtime detectors were also down, since they relied on Cloudflare too.

- Though the affected locations make up only 4% of the Cloudflare network, the outage had a disproportionate impact, affecting 50% of total requests and delaying or denying website access to millions of users worldwide.

Learnings

1: Cloudflare lists these learnings from the incident:

- Address procedural gaps to catch errors early on.

- Redesign the architecture to rule out unintentional errors at the design level.

- Explore automation opportunities to improve and implement staggered rollouts for critical updates such as network configuration changes.

- Implement an automated commit-confirm rollback workflow to instantly revert to the earlier working state when an outage occurs to reduce repair time and lower the impact on business.

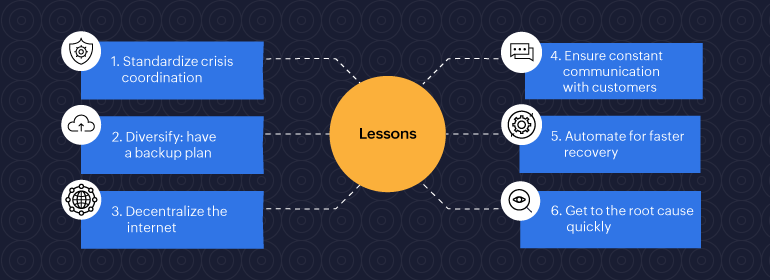
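The staggered rollout and commit-confirm ideas above can be sketched together. This is a simplified, in-memory illustration under stated assumptions: the `Site` class, the `commit_confirmed` and `staggered_rollout` functions, and the health check are hypothetical names, not Cloudflare's tooling. In a real commit-confirm workflow the device typically reverts on its own if no confirmation arrives within a time window; the sketch collapses that timer into a single health check.

```python
from typing import Callable, Dict, List

Config = Dict[str, str]


class Site:
    """In-memory stand-in for one data center's network configuration."""

    def __init__(self, name: str, config: Config) -> None:
        self.name = name
        self.active: Config = dict(config)

    def commit_confirmed(self, candidate: Config,
                         is_healthy: Callable[["Site"], bool]) -> bool:
        """Apply the candidate, keep it only if the health check confirms it."""
        previous = dict(self.active)
        self.active = dict(candidate)
        if is_healthy(self):
            return True          # confirmed: the new config stays
        self.active = previous   # not confirmed: restore the last working state
        return False


def staggered_rollout(stages: List[List[Site]], candidate: Config,
                      is_healthy: Callable[[Site], bool]) -> List[str]:
    """Roll out stage by stage and stop at the first failed confirmation.

    Returns the names of the sites that kept the new configuration.
    """
    updated: List[str] = []
    for stage in stages:
        for site in stage:
            if not site.commit_confirmed(candidate, is_healthy):
                return updated
            updated.append(site.name)
    return updated


# Hypothetical usage: a bad change is tried on a canary stage first.
old = {"policy_order": "advertise-then-reject"}
bad = {"policy_order": "reject-then-advertise"}
canary = [Site("canary-1", old)]
fleet = [Site("site-a", old), Site("site-b", old)]


def reachable(site: Site) -> bool:
    return site.active["policy_order"] == "advertise-then-reject"


print(staggered_rollout([canary, fleet], bad, reachable))  # []
print(fleet[0].active == old)                              # True
```

Because the canary fails its confirmation and rolls itself back, the bad change never reaches the larger stage, and no engineer needs access to the site to undo it.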

2: Standardize crisis coordination: Cloudflare stated in its post-incident blog that the return to normal was delayed as network engineers "walked over each other’s changes, reverting the previous reverts, causing the problem to reappear sporadically." This calls for robust standard operating procedures that set clear rules for access, control, and escalation, backed by constant communication.

3: Get to the root cause quickly: A thorough maintenance team that stays on top of errors can isolate the root cause and fix the issue faster.

4: Call for a decentralized internet: There is widespread concern that the internet has become increasingly centralized, with a handful of cloud companies and infrastructure providers responsible for connecting the globe. Recent incidents like the Fastly outage in June of 2021, the Facebook outage in October of 2021, and the AWS outage in December of 2021 had a significant impact on internet users around the globe, sparking conversations on the need to decentralize the internet.

5: Diversify and build resilience: Companies that run critical services should explore ways to reduce their dependence on a single vendor, diversify, and maintain a robust backup plan so they can deliver their business services without disruption. During CDN downtime, companies can enable CDN-independent access to their websites, which allows slow, albeit functioning, connections during the repair period.
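As a rough illustration of CDN-independent access, the sketch below picks the first healthy endpoint from an ordered list, preferring the CDN and falling back to the origin. The hostnames and the `first_healthy` function are hypothetical, and the health check is stubbed; in practice this decision is usually made at the DNS or load balancer level rather than in application code.

```python
from typing import Callable, Sequence


def first_healthy(endpoints: Sequence[str],
                  is_healthy: Callable[[str], bool]) -> str:
    """Return the first endpoint, in preference order, that passes the check."""
    for endpoint in endpoints:
        if is_healthy(endpoint):
            return endpoint
    raise RuntimeError("no healthy endpoint available")


# Hypothetical preference order: CDN first, direct origin as the slow fallback.
ENDPOINTS = ["cdn.example.com", "origin.example.com"]

# Stubbed health state simulating a CDN outage.
cdn_outage = {"cdn.example.com": False, "origin.example.com": True}

print(first_healthy(ENDPOINTS, lambda host: cdn_outage[host]))
# origin.example.com
```

The fallback path is expected to be slower, since traffic bypasses the CDN's caches, but it keeps the site reachable while the provider recovers.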

6: Ensure constant communication with customers: Use rich status pages that tell users how websites are functioning and explain the recovery path. Site24x7's StatusIQ enables transparent and instant communication through independently hosted status pages, with options to customize them to fit each brand's language. This reduces anxiety during downtime, drives down the number of support tickets, builds trust, and protects brand reputation.

Cloudflare's recent outage is an opportunity for IT departments to reevaluate how they roll out infrastructure changes and to make sure a restoration plan is in place for the critical parts of the internet that millions of users depend on.