Identify and eliminate bottlenecks in your application for optimized performance.

Best Practices for Scaling Node.js Apps: The Best Solution in Hand

The scalability of an application refers to its ability to handle workloads at extremes, especially when demand is high. If demand for resources grows steadily, the application should scale up to make room for the additional incoming traffic. Correspondingly, when traffic declines, the application should scale down and free up resources that are no longer needed.

This article will showcase some of the best practices and solutions for scaling Node.js apps and improving their stability.

Scaling Your Node.js Applications

When scaling Node.js applications, there are a few possibilities. One is remarkably straightforward: adding machines to distribute the workload, also known as “horizontal scaling.”

Horizontally scalable architectures can enhance performance and simplify scaling, but they also impose certain limitations. For instance, a common challenge when scaling horizontally is ensuring even load distribution. When the load is unevenly distributed, it can lead to sub-optimal performance, such as unpredictable response times.

The idea behind vertical scaling is to increase the CPU, memory, and/or instance size of a single server. Due to the rising cost of larger instances, this solution might be feasible for only some organizations, so horizontal scaling is often preferred.

While the problem of scaling your applications may sound intimidating at first glance, the Node.js ecosystem offers several tools that can help lower the cost of scaling. One approach is to automate configuration changes, so that capacity adjusts without manual intervention. Node.js applications also suit short-running units of deployment such as pods or tasks.

There are generally two ways of handling more workload - add more resources to an individual machine, or spread your application across multiple systems or networks.

The challenge with Node.js is that it runs your JavaScript on a single thread. By default, it uses a single core of a processor, even though the computer may have multiple cores. While simple applications that don't get much traffic can run comfortably within that single core, doing so doesn’t exploit the full processing power of your computer. Cloud computing instances commonly come with more than a single core.

Node.js applications can achieve horizontal scaling through multiple servers, a cluster of multi-core computers, or Kubernetes - a container orchestration platform. By adding more servers or allowing Kubernetes to deploy and manage multiple instances automatically, the application can seamlessly scale to accommodate increased traffic, providing high availability and scalability.

Running Multiple Processes on the Same Machine

Node.js is single-threaded by default. However, there is a built-in “native cluster mode” which can be used to spread the load across multiple processes. Or, you can decide to implement “PM2 cluster mode”.

Native cluster mode

One way of achieving horizontal scaling is to create a cluster and scale your application across the available CPUs. Figure 1 illustrates the difference between a default Node.js single thread and a clustered Node.js app at work in a multi-core computer.

Having multiple Node instances means having multiple main threads, one per process. So, if one of the processes crashes or is overloaded, the rest can handle incoming requests.

Node.js includes a built-in cluster module that allows execution on multiple processor cores rather than the default single-thread execution. Adding this native module needs just a few lines of code and will duplicate the application process across multiple cores.

The module allows you to scale your application by creating worker processes (child processes). With this approach, the application runs its primary process at launch, and the primary forks worker processes, typically one per CPU core, to handle incoming requests.

Worker processes listen for requests on a single port, through which all requests are routed. The Node.js cluster module runs an embedded load balancer in the primary process to distribute connections among the available worker processes (round-robin by default on every platform except Windows), making the native module ideal for handling a more significant number of requests.

Implementing Native Cluster Mode

First, build a simple Node.js server that processes some heavy requests without using clustering. This example executes a default, single-thread Node.js server. Save the following lines of code to a file named index.js:

const express = require("express");

const app = express();

// CPU-bound endpoint: the loop blocks the thread until it finishes
app.get("/heavytask", (req, res) => {
  let counter = 0;
  while (counter < 9000000000) {
    counter++;
  }
  res.end(`${counter} Iteration request completed`);
});

// Lightweight endpoint that responds immediately
app.get("/ligttask", (req, res) => {
  res.send("A simple HTTP request");
});

app.listen(3000, () => console.log("App listening on port 3000"));

Now run the application by executing node index.js.

Note that the first endpoint iterates up to a large number and will take considerably longer to respond. In contrast, the second endpoint returns a simple response. You can test both endpoints using Postman.

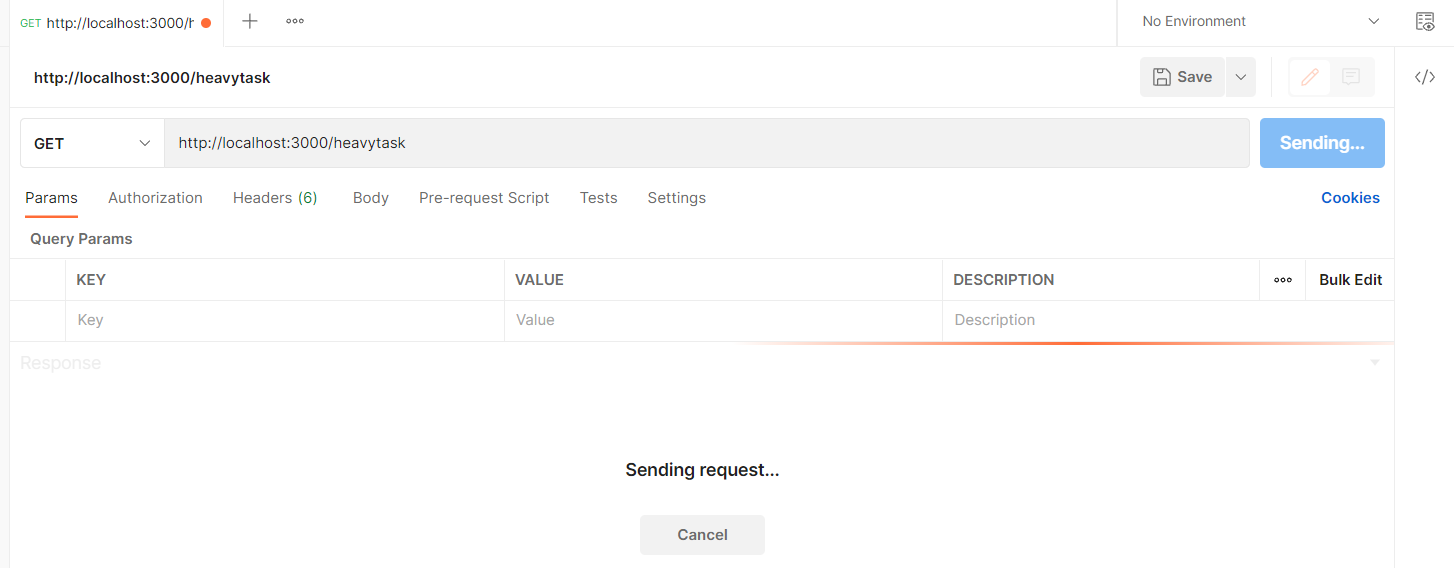

Sending a request to http://localhost:3000/heavytask blocks the application execution thread (Figure 1).

Fig. 1: The main thread is suspended, waiting for the request to be processed.


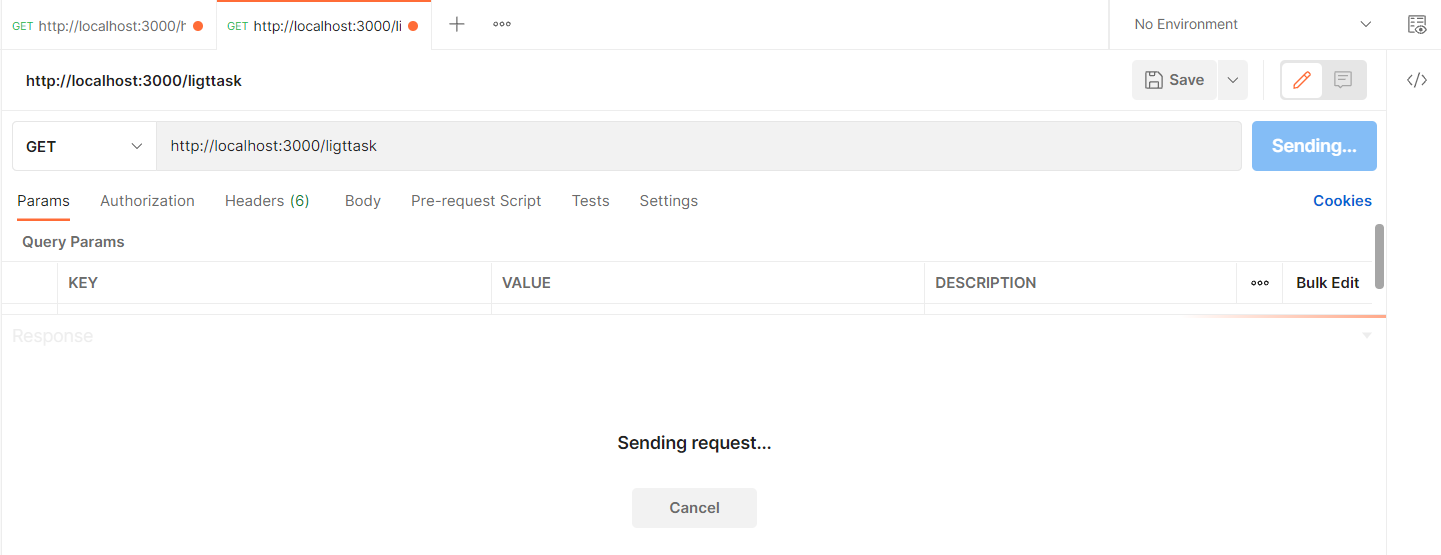

Now, sending a subsequent request to http://localhost:3000/ligttask won't return the expected response until the server finishes processing the first task and frees the single thread that handles both requests.

Fig. 2: Both requests are now waiting to be processed.


To solve this problem, modify your index.js file to use the cluster module as follows:

const express = require("express");
const cluster = require("cluster");
const os = require("os");

// check if the process is the primary process
// (cluster.isPrimary requires Node.js 16+; older versions use cluster.isMaster)
if (cluster.isPrimary) {
  // get the number of available CPU cores
  const CPUs = os.cpus().length;
  console.log(`Available CPUs: ${CPUs}`);

  // fork a worker process for each available CPU core
  for (let i = 0; i < CPUs; i++) {
    cluster.fork();
  }

  cluster.on("online", (worker) => {
    console.log(`worker ${worker.process.pid} is online`);
  });

  // print the messages that workers send with process.send()
  cluster.on("message", (worker, message) => {
    console.log(message);
  });
} else {
  // if the process is a worker process, listen for requests
  const app = express();

  app.get("/heavytask", (req, res) => {
    let counter = 0;
    while (counter < 9000000000) {
      counter++;
    }
    // report which worker process executed this request
    process.send(`Heavy request ${process.pid}`);
    res.end(`${counter} Iteration request completed`);
  });

  app.get("/ligttask", (req, res) => {
    // report which worker process executed this request
    process.send(`Light request ${process.pid}`);
    res.send("A simple HTTP request");
  });

  app.listen(3000, () => {
    console.log(`worker process ${process.pid} is listening on port 3000`);
  });
}

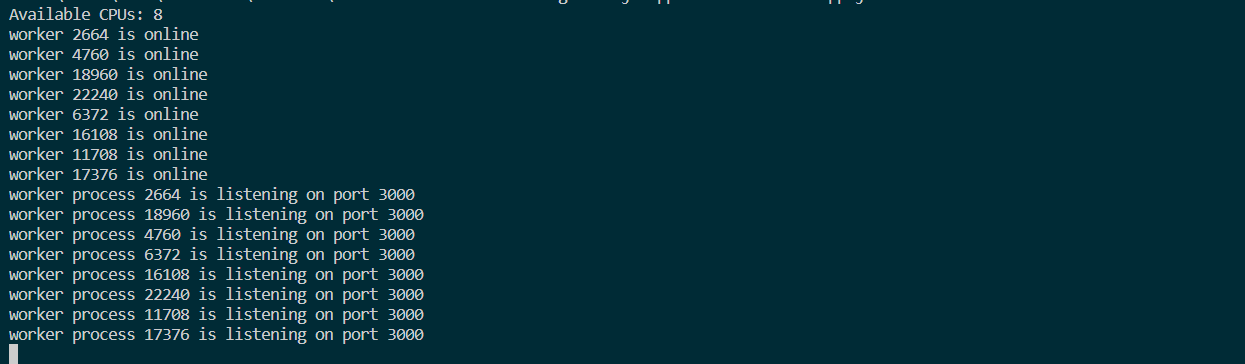

Now spin up the application again with node index.js. When testing the endpoints again, the light task will be executed immediately, and the heavy task will be handled by the worker process that has been assigned to it. The output in your terminal should be similar to the output shown in Figure 3.

Fig. 3: Multiple cores handle multiple requests.


The PC used to write this post has 8 cores, so 8 worker processes are forked. They’re all mapped to the same port and ready to listen for connections. Each worker runs its own instance of Google’s V8 engine.

You can measure the benefits of using the available cores. Assume you have an application that should handle 10,000 requests from 100 users in production. As in the previous example, you can spread those requests across all available cores.

Rather than using the previous example, let’s replace it with a simple Node.js application:

const express = require("express");

const app = express();

app.get("/", (req, res) => {
  res.send("A simple HTTP request");
});

app.listen(3000, () => console.log("App listening on port 3000"));

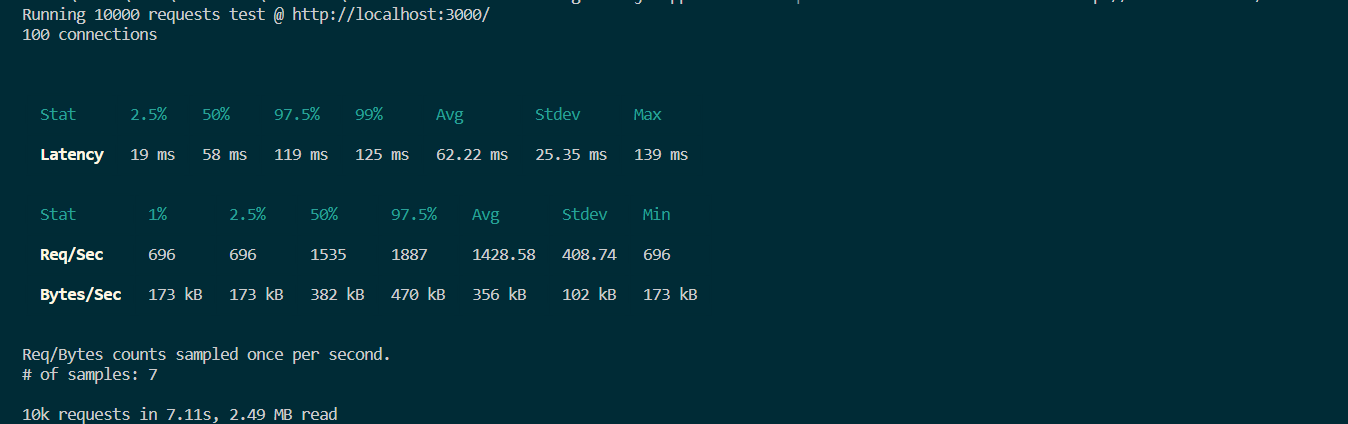

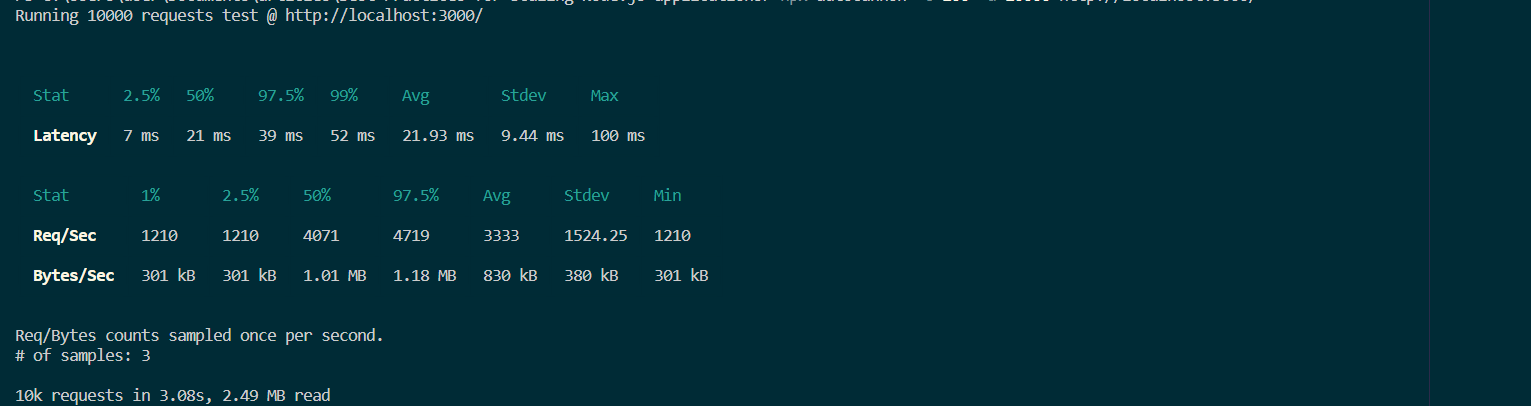

Boot up the application once again with node index.js. Then, run the following command to simulate a high workload with Autocannon. A sample run appears in Figure 4.

$ npx autocannon -c 100 -a 10000 http://localhost:3000/

Fig. 4: Output from a sample single-core run.


It took about 7 seconds to execute 10,000 requests for this simple Node.js application. Let’s see whether there is any change when you use clustering on a 4-core computer.

The corresponding application, which uses the Node.js cluster module, follows. Figure 5 shows the output.

const express = require("express");
const cluster = require("cluster");
const os = require("os");

// check if the process is the primary process
// (cluster.isPrimary requires Node.js 16+; older versions use cluster.isMaster)
if (cluster.isPrimary) {
  // get the number of available CPU cores
  const CPUs = os.cpus().length;

  // fork a worker process for each available CPU core
  for (let i = 0; i < CPUs; i++) {
    cluster.fork();
  }
} else {
  // if the process is a worker process, listen for requests
  const app = express();

  app.get("/", (req, res) => {
    res.send("A simple HTTP request");
  });

  app.listen(3000, () => {
    console.log(`worker process ${process.pid} is listening on port 3000`);
  });
}

Fig. 5: Output from a sample multi-core run.


The example shows how 10,000 requests are handled in just 3 seconds. Native cluster mode can be configured in various ways. Getting started is simple and can provide quick performance gains.

PM2 Cluster Mode

The Node.js ecosystem offers another way to achieve clustering, namely PM2. PM2 is a third-party process manager for Node.js that lets you work with zero-downtime clusters.

Native clusters certainly have benefits, but they require you to explicitly create and manage worker processes. Firstly, you must determine the number of available cores and the number of workers you should spawn.

The example in the previous section added a small but significant amount of code to manage the clustering within that server. In production, you must manually write and manage the cluster of every Node.js application you have deployed. This heavily increases the code complexity you need to utilize the available CPU cores effectively.

PM2 is a process manager that executes Node.js applications automatically in cluster mode. In addition to spawning workers for you, PM2 handles all the processes you would otherwise have to implement manually with the native cluster module.
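As a rough sketch of what this can look like, PM2 is able to read its settings from an ecosystem file. The file name `ecosystem.config.js`, the app name `api`, and the `index.js` entry point are assumptions chosen for illustration; `exec_mode` and `instances` are PM2 options.

```javascript
// ecosystem.config.js - a minimal sketch of a PM2 cluster-mode configuration.
// The app name "api" and the entry point "./index.js" are illustrative assumptions.
const config = {
  apps: [
    {
      name: "api",
      script: "./index.js",
      exec_mode: "cluster", // run under PM2's cluster mode instead of fork mode
      instances: "max",     // start one worker per available CPU core
    },
  ],
};

module.exports = config;
```

With PM2 installed, `pm2 start ecosystem.config.js` would launch the workers, and `pm2 reload api` restarts them one at a time to avoid downtime. The application file itself needs no cluster-specific code, so the single-process server from the earlier example can be used unchanged.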

Choosing between Native Cluster and PM2

Clustering should likely be your first step toward scaling a Node.js application. You might want to go with the native cluster module as it requires little extra work in a single application - add a few lines of code, and you’re done.

When you are managing a fleet of applications, however, it can quickly become tedious. If you're interested in a low-code model and require additional production support, PM2 is likely the better option.

Running across Multiple Machines with Network Load Balancing

The previous two methods allow you to horizontally scale your application in a single machine across multiple cores. While that’s a handy mechanism to implement in a single-threaded runtime like Node.js, it’s also possible to distribute your application instances across multiple machines.

This approach is comparable to how the cluster module directs traffic to the child worker process. However, in this case, you’re distributing the application traffic across multiple servers that run the same instance of your application.

To scale across multiple machines, you need a “load balancer”. A load balancer distributes the workload among available worker nodes (Figure 6), for example by identifying the server with the least workload (traffic) or the quickest response time.

Fig. 6: A load balancer distributes requests among servers.


A load balancer serves as your "traffic cop" in front of your servers, distributing client requests across all servers capable of handling them. This will optimize load times and create greater resilience.

A load balancer achieves this by ensuring no server is overwhelmed, as the excess load can cause performance degradation. If a server goes offline or crashes, the load balancer redirects traffic to active and healthy servers. And, if a new server is added, the load balancer forwards requests to the new server.

NGINX is a widely used open-source web server that can also handle use cases such as load balancing, reverse proxying, and caching. NGINX's customizable features, including session persistence, SSL termination, built-in health checks, and TCP, HTTP, and HTTPS server configuration, make it a highly efficient tool for managing and scaling web traffic across multiple servers.

Containerization to the Rescue

An application container is a lightweight, standalone image that contains code and all its dependencies so it can run quickly and reliably in different computing environments. The container image includes everything needed to run an application: code, runtime, system tools, system libraries, and settings.

As an alternative to containers, you can deploy a Node.js application to a virtual machine (VM) on some host system once it's ready for production. The VM must, however, be powered up by several layers of hardware and software, as shown in Figure 7.

Fig. 7: Infrastructure for virtual machines.


The VM running the application requires a guest OS. On top of that, you add some binaries and libraries to support your application (Figure 8).

Fig. 8: Infrastructure and components of the virtual machine.


Once in production, you need to ensure the scalability of this application. Figure 9 shows how this can be done using two additional VMs.

Fig. 9: Three virtual machines running on one physical computer.


Even though your application might be lightweight, to create additional VMs, you have to deploy that guest OS, binaries, and libraries for each application instance. Assuming that these three VMs consume all of the resources for this particular hardware and assuming that the application uses other software - such as MySQL for database management - the architecture becomes difficult to manage.

Each software component hosts its own dependencies and libraries. Some applications need specific versions of libraries. This means that even if you have a MySQL server running on your system, you must ensure you have the specific version that the Node.js application needs.

With such a huge number of dependencies to manage, you will end up in a dependency matrix hell, unable to easily upgrade or maintain the software.

Assuming you’ve developed the application on Windows, deploying it to a Linux system will likely introduce incompatibilities. Sharing the same copy of the application across different hosts can be challenging, as each host has to be configured with all the libraries and dependencies, with correct versioning across the board.

Virtual machines are great for running applications that need OS-level features. However, deploying multiple instances of a single lightweight application can take a lot of work to manage. As you can see, maintaining your single Node.js application in such environments can be complex.

Containerization can be an excellent solution to these kinds of problems. With containerization, you package your applications and run them in isolated environments. You can run powerful applications quickly even if those applications need different computing environments.

Containerization provides a standardized, lightweight method to deploy applications to various environments. Containers make it easier to build, ship, deploy, and scale applications.

Figure 10 depicts how different Node.js instances can run within a containerized environment.

Fig. 10: Three containers running on a single physical computer.


With containers, you don’t need a guest OS to run your application, as the container shares the host’s kernel. Resources are shared among the containers, and your application consumes fewer resources. If some container process isn't utilizing the CPU or memory, those shared resources become accessible to the other containers running on that hardware. In short, a container uses only the resources it needs.

For dependencies - such as a MySQL server - you need only one container to run the service. By using containers, you also increase the portability and compatibility of your application, meaning it doesn't matter whether it's running on an Ubuntu server with 20 cores or an Alpine server with 4. The container will contain everything your application needs. All you need to do is ensure that the host system supports a container runtime (e.g., Docker, runc, or containerd).

In short, containers allow you to:

- Have consistent environments, letting you choose the languages and dependencies you want for your project without worrying about system conflicts

- Scale more easily

- Isolate processes, making troubleshooting easier

- “Build once, deploy anywhere,” allowing you to package and share your code with other teams and environments

- Have strong support for DevOps and continuous integration/continuous delivery (CI/CD)

- Support automation, improving developer experience

- Automate scaling. Cron jobs that periodically scale containers can free up SREs to focus on other aspects, although modern orchestration systems like Kubernetes provide more efficient tools, such as the Horizontal Pod Autoscaler (HPA) and Cluster Autoscaler, that can automate scaling based on application metrics

The top tools for container management are:

- Docker

- AWS Fargate

- Google Kubernetes Engine

- Amazon Elastic Container Service (ECS)

- Linux containers (LXC)

The Principles of Scaling Node.js

In this article, we have seen that scaling an application can be achieved in several ways. To scale a single-instance Node.js application running on a single computer, follow three main principles: cloning, decomposition, and data sharding. The examples explored so far in this article fall under the “cloning” principle.

Cloning

Cloning (also known as forking) duplicates a Node.js application and runs multiple instances of the same application, splitting traffic between those instances. Each instance is assigned part of the workload.

There are multiple ways of dividing this workload. Two of the most common approaches are round-robin scheduling, where requests are spread equally over the available instances, and least-load balancing, where your load balancer always sends a request to the instance with the lowest load.
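To make the two selection strategies concrete, here is a minimal sketch in plain JavaScript. It is not how any particular load balancer is implemented; the instance names and the `activeRequests` field are hypothetical, standing in for whatever load metric your balancer tracks.

```javascript
// Round-robin: hand out instances in a fixed rotation.
function createRoundRobin(instances) {
  let next = 0;
  return function pick() {
    const chosen = instances[next];
    next = (next + 1) % instances.length; // wrap around to the first instance
    return chosen;
  };
}

// Least-load: choose the instance with the fewest in-flight requests.
function pickLeastLoaded(instances) {
  return instances.reduce((best, candidate) =>
    candidate.activeRequests < best.activeRequests ? candidate : best
  );
}

// Hypothetical application instances and their current load.
const instances = [
  { name: "app-1", activeRequests: 4 },
  { name: "app-2", activeRequests: 1 },
  { name: "app-3", activeRequests: 7 },
];

const roundRobin = createRoundRobin(instances);
console.log(roundRobin().name, roundRobin().name, roundRobin().name, roundRobin().name);
// → app-1 app-2 app-3 app-1

console.log(pickLeastLoaded(instances).name);
// → app-2
```

Round-robin needs no knowledge of the instances' state, which makes it cheap, while the least-load strategy adapts better when some requests are much heavier than others.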

Cloning goes hand-in-hand with using the Node.js cluster module. The load balancer provides efficient performance when you clone your application and distribute the traffic to multiple instances of your application, ensuring that the workload is shared.

Decomposition

A monolithic application can be highly complex to manage. If a monolithic application is decomposed, each service can be handled by an independent microservice.

A good example is a Node.js application providing a database and a front-end user interface. The database, front-end, and back-end can be split into microservices, letting you run each service independently.

Currently, the best model for decomposition is containerization. In this model, you decouple your app into multiple microservices and put each microservice in its own container. While each container runs a single service, the services can still work closely together.

Data sharding

Node.js scalability and availability depend heavily on the data capabilities of your application. Splitting data into meaningful subsets - shards - allows you to partition your storage. This way, you split your data across instances that run on different machines or data centers.

Assuming your existing database can’t handle the amount of requested data, you can split your database into several instances, each responsible for only a part of the whole data set. This kind of data distribution is also called horizontal partitioning.
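One simple way to decide which instance owns a record is to hash a key and map it to a shard. The sketch below assumes hash-based routing on a string key; the shard names are hypothetical placeholders for real database connections, and many databases provide their own sharding mechanisms that you would use instead.

```javascript
const crypto = require("node:crypto");

// Hypothetical shard names; in practice these would map to database connections.
const shards = ["users-db-0", "users-db-1", "users-db-2", "users-db-3"];

function shardFor(key, shardList = shards) {
  // Hash the key so that the same key always lands on the same shard
  // and different keys spread across the available shards.
  const digest = crypto.createHash("sha256").update(String(key)).digest();
  const bucket = digest.readUInt32BE(0) % shardList.length;
  return shardList[bucket];
}

console.log(shardFor("user:1001"));
console.log(shardFor("user:1002"));
```

Note that with plain modulo hashing, changing the number of shards remaps most keys, so schemes such as consistent hashing are often preferred when shards are added or removed regularly.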

While sharding doesn’t occur inside your application but rather in your database, scaling out your application should be the primary objective of the shard. Deploying extra machines to an existing stack will split the workload, improving traffic flow and enabling faster processing.

When Should You Scale Your Node.js Application?

It’s always important to monitor your application and notice when it’s running in an environment that might create a high workload. You can then use the gathered metrics to determine whether you need to change your scaling strategy.

Scenarios where sharding and horizontal database partitioning can be effective:

- The application starts to exhaust disk space or memory

- The application performs too many write operations for one server

- You need to distribute the load across multiple servers to improve speed and performance

- You need to improve the reliability and availability of the database by replicating data across multiple nodes or clusters

- You need to optimize the database for read-heavy or write-heavy workloads

Here are some common scenarios you need to consider when changing your scaling strategy.

CPU & RAM Usage

High CPU and RAM usage can be a clear indicator that your application is utilizing the maximum available memory and cores in your machine. When this occurs, users may experience delays due to excessive load, resulting in requests taking more time than necessary. At this point, you should consider scaling strategies that fit your application's ability to scale.

It's advisable to keep an eye on your application's CPU and memory usage, which can be accomplished with one of the following tools:

- htop for Unix systems

- Process Explorer for Windows-based systems

- The Node.js native OS module

These tools let you check the load average for each core in real time. You can monitor usage, get alerted when there are signs of incoming issues, and intervene before things get out of hand.
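For the native OS module, a minimal sketch of such a check might look like the following. The function name `resourceSnapshot` and the shape of the returned object are assumptions made for illustration; the `os` and `process` calls are standard Node.js APIs.

```javascript
const os = require("node:os");

function resourceSnapshot() {
  const cores = Math.max(os.cpus().length, 1);
  // 1-minute load average; os.loadavg() always reports [0, 0, 0] on Windows.
  const [load1] = os.loadavg();
  const totalMem = os.totalmem();
  const freeMem = os.freemem();

  return {
    cores,
    load1PerCore: load1 / cores,                        // sustained values near 1 suggest CPU saturation
    memoryUsedRatio: (totalMem - freeMem) / totalMem,   // share of system memory in use
    processRssMb: process.memoryUsage().rss / (1024 * 1024), // memory held by this process
  };
}

console.log(resourceSnapshot());
```

Calling a function like this on a timer and shipping the result to your logging or alerting system gives you a basic early-warning signal without extra dependencies.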

High Latency

While high CPU and RAM usage may be common reasons for high latency, they aren’t the only possible causes. Therefore, it’s vital to monitor latency separately.

An optimal application load time should not exceed 1-2 seconds. Any delay beyond this threshold runs the risk of users losing interest, resulting in a staggering 87% user abandonment rate. Furthermore, statistics indicate that around 50% of users abandon applications that take more than 3 seconds to load. Slow website load times also have a detrimental effect on Google search ranking.

Applications are expected to run as fast as possible, as it increases user satisfaction and Google ranking. Test your application response time routinely, rather than wait for user complaints.

It’s important to keep track of failed requests and the percentage of long-running requests. Any sign of high latency sends a warning that scaling is necessary.
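The metrics mentioned above can be derived from a list of recorded requests. The following sketch assumes each sample has a `durationMs` and an `ok` flag, and that a request counts as "long-running" above a hypothetical 1,000 ms threshold; both the sample shape and the threshold are illustrative choices.

```javascript
// Summarize request samples of the form { durationMs, ok }.
// Assumes at least one sample is provided.
function summarizeLatency(samples, slowThresholdMs = 1000) {
  const durations = samples.map((s) => s.durationMs).sort((a, b) => a - b);

  // Nearest-rank 95th percentile of the response times.
  const rank = Math.ceil(0.95 * durations.length);
  const p95 = durations[Math.max(rank - 1, 0)];

  const slow = samples.filter((s) => s.durationMs > slowThresholdMs).length;
  const failed = samples.filter((s) => !s.ok).length;

  return {
    p95,
    slowRatio: slow / samples.length,     // share of long-running requests
    failedRatio: failed / samples.length, // share of failed requests
  };
}

const samples = [
  { durationMs: 120, ok: true },
  { durationMs: 340, ok: true },
  { durationMs: 95, ok: true },
  { durationMs: 2100, ok: true },
  { durationMs: 180, ok: false },
];

console.log(summarizeLatency(samples));
// → { p95: 2100, slowRatio: 0.2, failedRatio: 0.2 }
```

Percentiles are usually more informative than averages here, because a small number of very slow requests can hide behind a healthy-looking mean.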

Too Many WebSockets

WebSockets are used for efficient server communication over a two-way, persistent channel, and are particularly popular in real-time Node.js applications, as the client and server can communicate data with low latency.

WebSockets take advantage of Node.js's single-threaded event loop and its asynchronous, non-blocking I/O, which processes requests without blocking. But Node.js can handle enough concurrent connections that the number of socket channels grows beyond the capacity of a single Node.js server.

Therefore, when using WebSockets with Node.js (here through Socket.IO), track the number of connected clients at any time and feed the results to tracking and logging systems. Older Socket.IO releases expose this through io.sockets.clients(), while more recent releases provide io.engine.clientsCount. From here, you can scale your application to match your node’s capacity.

Too Many File Descriptors

A file descriptor is a non-negative integer used to identify an open file. Each process keeps its own table of descriptors, and every newly opened file or socket adds an entry to it.

Each process is allowed a maximum number of file descriptors at any given time, making it possible to receive “Too many open files” errors. Horizontal scaling is likely to be an optimal solution in this case.

Event Loop Blockers

The event loop is a Node.js mechanism that handles events efficiently in a continuous loop, allowing Node.js to perform non-blocking I/O operations. Figure 11 offers a simplified overview of the Node.js event loop based on the order of execution, where each step is referred to as a phase of the event loop.

Fig. 11: Phases of a typical event loop in Node.js.


Event loop utilization refers to the ratio between the amount of time the event loop is active in the event provider and the overall duration of its execution.

The event loop processes incoming requests quickly, and each phase has a callback queue pointing to all the callbacks that must be handled during the given phase. All events are executed sequentially in the order they were received, with the event loop continuing until either the queue is empty or the callback limit is exceeded. Then, the event loop progresses to the next phase.

If you execute a CPU-intensive callback without releasing the event loop, all other callbacks will be blocked until the event loop is free. This roadblock is referred to as an event loop blocker. Since no incoming callback is executed until the CPU-intensive operation completes, there are huge performance implications. The solution is to mitigate the delay caused by the blockers, for example by splitting long computations into smaller chunks or moving them off the main thread.

An event-driven reverse proxy such as NGINX, placed in front of your Node.js app, can help it cope with very large numbers of concurrent requests as you scale. On the Node.js side, monitor the event loop so that you can detect whether it is blocked longer than the expected threshold.
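One way to watch for blockers from inside the process is the event loop utilization API in Node.js's built-in `perf_hooks` module. The sketch below samples utilization once per second; the 0.9 threshold, the sampling interval, and the function name `sampleUtilization` are assumptions for illustration rather than recommended values.

```javascript
const { performance } = require("node:perf_hooks");

const UTILIZATION_THRESHOLD = 0.9; // hypothetical alert level, tune per application
const SAMPLE_INTERVAL_MS = 1000;   // hypothetical sampling period

let previous = performance.eventLoopUtilization();

function sampleUtilization() {
  const current = performance.eventLoopUtilization();
  // With two arguments, the call returns the utilization between the two readings.
  const delta = performance.eventLoopUtilization(current, previous);
  previous = current;
  return delta.utilization;
}

const timer = setInterval(() => {
  const utilization = sampleUtilization();
  if (utilization > UTILIZATION_THRESHOLD) {
    console.warn(`Event loop utilization high: ${(utilization * 100).toFixed(1)}%`);
  }
}, SAMPLE_INTERVAL_MS);

// Do not keep the process alive just for monitoring.
timer.unref();
```

Because the timer itself runs on the event loop, a fully blocked loop delays the warning until the blocking callback returns. The value is still useful as a trend: sustained readings close to 1 indicate that the loop has little idle time left.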

Best Practices for Efficient Node.js Performance

You'll likely run into scaling issues as your business grows. Scaling is a continuous process, so knowing when to scale your application is essential.

Besides the points mentioned above, there are additional practices that you can consider to make your Node.js application run as efficiently as possible. Some examples are:

- Serving static assets with NGINX rather than with Node.js

- Using a cache such as Redis to reduce application lookups

- Implementing database query optimization to ensure database queries are as efficient as possible and don't add too much server latency

- Tracking down memory leaks to ensure running services don’t hold on to memory unnecessarily. Heap snapshots and memory profilers help you analyze memory leaks

- Real-time monitoring and logging to analyze and get real-time metrics on your Node.js application's performance

- Optimizing your Node.js API for data processing by implementing pagination

- Building your Node.js application with the optimal server strategy, such as integrating Node.js with gRPC to resolve requests from a gateway to a server

- Reducing response sizes and network traffic with GraphQL
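As an illustration of the pagination point, the following sketch returns one page of results plus the metadata a client needs to request the next one. The `paginate` helper and the `orders` data are hypothetical, and a real API would normally apply the limit and offset in the database query rather than slicing an array in memory.

```javascript
function paginate(items, page = 1, pageSize = 20) {
  const total = items.length;
  const totalPages = Math.max(Math.ceil(total / pageSize), 1);
  // Clamp the requested page into the valid range.
  const current = Math.min(Math.max(page, 1), totalPages);
  const start = (current - 1) * pageSize;

  return {
    page: current,
    pageSize,
    total,
    totalPages,
    data: items.slice(start, start + pageSize),
  };
}

// Hypothetical data set of 45 records.
const orders = Array.from({ length: 45 }, (_, i) => ({ id: i + 1 }));

const result = paginate(orders, 3, 20);
console.log(result.totalPages, result.data.length, result.data[0].id);
// → 3 5 41
```

Returning a bounded page keeps response sizes and serialization time predictable, regardless of how large the underlying data set grows.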

Conclusion

This article has covered a few potential approaches to scaling Node.js. Before choosing which optimization technique to use, evaluate each strategy against your specific application and infrastructure.