A Comprehensive Guide to Memory Leaks

Memory has become cheaper and more available over the years, but that doesn’t mean developers can afford to be careless with it. Efficient memory use still matters if you are prioritizing performance, reliability, scalability, and cost. A memory-hungry application may work fine during development or small-scale testing, only to fail under real-world loads or in production environments where uptime and resource constraints matter.

One common misconception is that memory leaks are only a problem in low-level languages like C or C++. But that’s just not true. Even in high-level languages with automatic garbage collection, like Java, Python, or JavaScript, memory leaks can and do happen. They just show up in different ways and can cause different kinds of problems.

This guide goes over what memory leaks are, how they happen in different environments, what signs to look out for, and how to debug and fix them. Let’s get started!

What Are Memory Leaks?

A memory leak happens when a program holds on to memory it no longer needs and never releases it. Over time, these unused memory blocks pile up, which can slow down the system and eventually cause the app or system to crash.

Memory leaks are a type of resource leak, which is a broader term that refers to any situation where a program does not properly free system resources such as memory, file handles, database connections, threads, or network sockets.

Back in the early days of programming, developers had to manage memory manually. In languages like C, you had to explicitly allocate memory with functions like malloc() and free it with free(). Forgetting to free memory was a common cause of memory leaks, and debugging such issues was painful.

Today, many languages come with automatic memory management through garbage collectors. These systems are designed to track which parts of memory are still in use and clean up the rest. This has made life easier, but not leak-proof.

If your code keeps references to unused objects, or holds on to listeners, caches, or closures longer than needed, the garbage collector won’t clean them up. That’s how memory leaks still happen in modern apps.

What Are the Causes of Memory Leaks?

Before going into the specific causes, it helps to understand how memory is managed in most programming environments.

In most systems, memory is divided into two main areas: the stack and the heap. The stack is used for storing variables with short lifetimes, like function arguments and local variables. It follows a strict order (last in, first out) and memory is automatically cleaned up when a function exits.

The heap, on the other hand, is used for dynamically allocated memory. This is where objects, arrays, and other data structures live if they need to stick around beyond a single function call.

In high-level languages like JavaScript or Python, the garbage collector (GC) watches the heap and automatically frees memory that is no longer in use. But the GC only cleans up memory that’s unreachable. If something is still referenced, even if it’s no longer needed, the GC won’t touch it. That’s where memory leaks creep in.

With that understood, let’s look at some of the most common causes of memory leaks and how they happen in real-world code.

Unreleased References

This happens when your code holds onto memory that it no longer needs. Maybe it's a large array, a file handle, an object, or some temporary data you forgot to clear.

Example (JavaScript):

let cache = {};

function loadData(key, value) {
  cache[key] = value; // never cleared
}

If this cache keeps growing and old entries are never removed, memory usage will keep going up.
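One common way to stop a cache like this from growing without bound is to cap its size and evict the least recently used entry. The sketch below shows that idea in Python rather than JavaScript; the class name BoundedCache and the max_entries parameter are hypothetical names chosen for illustration, not part of any library.

```python
from collections import OrderedDict


class BoundedCache:
    """A small least-recently-used cache that never holds more than max_entries items."""

    def __init__(self, max_entries=1000):
        self.max_entries = max_entries
        self._items = OrderedDict()

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)           # mark as most recently used
        while len(self._items) > self.max_entries:
            self._items.popitem(last=False)    # evict the least recently used entry

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)
        return self._items[key]

    def __len__(self):
        return len(self._items)


cache = BoundedCache(max_entries=3)
for i in range(10):
    cache.put(f"key-{i}", i)
```

After the loop, only the three most recent keys remain, so memory use stays flat no matter how many entries are written.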

Event Listeners and Callbacks

If you attach event listeners to DOM elements or system resources and don’t remove them when they’re no longer needed, it can lead to leaks, especially if the element gets removed from the page but the listener stays in memory.

Example (JavaScript):

const button = document.getElementById('submit');

button.addEventListener('click', handleClick);

// Later, the button is removed but listener stays attached

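As long as the listener is registered, it holds a reference to the handler function and to anything the handler’s closure captures. If your code also keeps a reference to the removed element (like the button variable above), neither the element nor the handler can be garbage collected.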
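The same retention pattern exists outside the browser. The following Python sketch uses a hypothetical EventBus and Widget (both invented for this illustration) to show that a subscribed callback keeps its owner alive until it is unsubscribed. A weak reference is used only as a probe to observe whether the object still exists.

```python
import gc
import itertools
import weakref


class EventBus:
    """Hypothetical publisher that keeps strong references to its listeners."""

    def __init__(self):
        self._listeners = {}
        self._ids = itertools.count()

    def subscribe(self, callback):
        token = next(self._ids)
        self._listeners[token] = callback
        return token                       # caller keeps the token to unsubscribe later

    def unsubscribe(self, token):
        self._listeners.pop(token, None)

    def publish(self, event):
        for callback in list(self._listeners.values()):
            callback(event)


class Widget:
    def __init__(self):
        self.seen = []

    def on_event(self, event):
        self.seen.append(event)


bus = EventBus()
widget = Widget()
token = bus.subscribe(widget.on_event)     # the bound method references widget
bus.publish("click")

probe = weakref.ref(widget)                # does not keep widget alive
del widget
gc.collect()
alive_while_subscribed = probe() is not None    # the bus still reaches the widget

bus.unsubscribe(token)
gc.collect()
alive_after_unsubscribe = probe() is not None   # nothing references it any more
```

Returning a token from subscribe() is one possible design; it lets the caller unsubscribe without holding on to the callback itself.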

Circular References

This is when two or more objects reference each other in a way that forms a loop. In some older garbage collectors, this would cause leaks because reference counting couldn’t detect the cycle.

Example (pseudo-code):

function createCycle() {
  let objA = {};
  let objB = {};
  objA.ref = objB;
  objB.ref = objA;
}

Modern GCs can usually handle this, but in some cases (especially involving DOM nodes and closures), cycles can still cause problems.
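CPython is a convenient place to observe this, because it combines reference counting with a separate cycle collector. The sketch below (Node is a hypothetical class) pauses the cycle collector, builds a two-object loop, and shows that the objects survive until a collection is run. The behavior described is specific to CPython; other runtimes may differ.

```python
import gc
import weakref


class Node:
    def __init__(self, name):
        self.name = name
        self.ref = None


def create_cycle():
    a = Node("a")
    b = Node("b")
    a.ref = b
    b.ref = a
    return weakref.ref(a)    # a weak reference does not keep `a` alive


gc.disable()                 # pause automatic cycle collection so the effect is visible
try:
    probe = create_cycle()
    # Reference counting alone cannot free the pair: each object still
    # holds a reference to the other, so neither count reaches zero.
    alive_before_collect = probe() is not None
    gc.collect()             # the cycle collector finds and frees the unreachable loop
    alive_after_collect = probe() is not None
finally:
    gc.enable()
```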

Unmanaged Resources

If your code uses system resources like file handles or database connections, and you forget to close or release them, it’s a form of memory/resource leak.

Example (Python):

def read_file():
    f = open('data.txt')
    data = f.read()
    # forgot to call f.close()

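Even though the memory used here might be small, the number of open files can grow quickly if the function is called often. CPython usually closes the file once the file object is reclaimed, but that timing is an implementation detail, and other interpreters may keep the handle open much longer.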
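In Python, the usual way to avoid this is a with block, which closes the file when the block exits, even if an exception is raised. The sketch below writes a temporary file first so that it runs anywhere; the temporary path is created only for the demonstration.

```python
import os
import tempfile


def read_file(path):
    # The with block closes the file when it exits, even if read() raises.
    with open(path, encoding="utf-8") as f:
        data = f.read()
    return data, f.closed


# Create a throwaway file so the sketch is self-contained.
fd, tmp_path = tempfile.mkstemp(suffix=".txt")
with os.fdopen(fd, "w", encoding="utf-8") as tmp:
    tmp.write("hello")

contents, was_closed = read_file(tmp_path)
os.remove(tmp_path)
```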

Global Variables

Global variables are another common source of memory leaks. Since they stay alive for the entire lifetime of the app, any data stored in them will also stay in memory unless explicitly cleared.

Example (JavaScript):

var bigData = new Array(1000000).fill('data');

// bigData stays in memory until the page is refreshed or manually cleared

In large apps or long-running processes, uncontrolled use of global variables can slowly bloat memory usage and make leaks harder to track down.

Why Is It Important to Resolve Memory Leaks?

Here are the key reasons why modern apps can’t afford to have memory leaks:

- They can cause applications to slow down over time, especially if they run continuously or handle a high volume of traffic.

- They make resource usage unpredictable, which can lead to performance bottlenecks that are hard to diagnose.

- In environments with limited memory (e.g., mobile apps, embedded systems), leaks can cause crashes or app shutdowns.

- They increase infrastructure costs in cloud-based systems, where memory usage is tied directly to pricing.

- Leaks can make debugging harder, as memory-related bugs often appear much later than the code that caused them.

- In multi-user systems, a single leak can affect all users by exhausting shared memory.

Symptoms of Memory Leaks

Next, let’s explore some common symptoms of memory leaks and how you can spot them:

Gradual Performance Degradation

Memory leaks often don’t cause immediate problems. Instead, the application becomes slower over time as more memory is used but never released.

You’ll notice:

- Pages or screens take an increasing amount of time to load the longer the app runs

- Interactions that were once instant become sluggish

- High CPU usage as the system struggles to manage memory pressure

Out-of-Memory Errors and Crashes

If leaked memory adds up over time, the app may eventually hit system memory limits and crash or get killed by the OS.

You’ll notice:

- Frequent "Out of Memory" errors in logs or crash reports

- Apps being force-closed on mobile devices without warning

- Backend services restarting unexpectedly under load

Inconsistent Behavior After Repeated Use

Some leaks only show up after users perform certain actions many times, like opening and closing a modal or switching between views.

You’ll notice:

- The app feels fine at first but gets worse with repeated actions

- Features that worked earlier in a session start to misbehave

- A restart temporarily fixes the issue

Increased Garbage Collection Activity

In garbage-collected languages, memory leaks can cause the GC to run more often as it tries to free up space, even if it doesn’t succeed.

You’ll notice:

- More frequent or longer GC pauses in performance logs or monitoring dashboards

- Noticeable lag spikes even when the app isn’t doing much

- Higher CPU usage with little improvement in memory use
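If you want to see this symptom from inside a Python process, CPython exposes a gc.callbacks list whose entries are called at the start and end of every collection. The sketch below counts collections and the time spent in them. The names gc_stats and track_gc are hypothetical, and the figures only cover CPython's cycle collector, not reference-counting frees.

```python
import gc
import time

gc_stats = {"runs": 0, "total_seconds": 0.0, "collected": 0}
_started_at = None


def track_gc(phase, info):
    """Called by CPython before ("start") and after ("stop") each collection."""
    global _started_at
    if phase == "start":
        _started_at = time.perf_counter()
    elif phase == "stop" and _started_at is not None:
        gc_stats["runs"] += 1
        gc_stats["total_seconds"] += time.perf_counter() - _started_at
        gc_stats["collected"] += info["collected"]
        _started_at = None


gc.callbacks.append(track_gc)
try:
    # Build some cyclic garbage, then force a collection so the callback fires.
    for _ in range(1000):
        pair = [None]
        pair[0] = pair
    del pair
    gc.collect()
finally:
    gc.callbacks.remove(track_gc)
```

Exporting these counters to a dashboard makes it easier to notice when collections become more frequent without memory use going down.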

Failure to Scale Under Load

Memory leaks can limit how well your app scales, especially in environments where many users or requests are served in parallel.

You’ll notice:

- Sharp performance drop when user count or traffic increases

- Memory spikes under high load even with proper scaling infrastructure

- New sessions being rejected or delayed due to exhausted resources

Unreleased External Resources

Memory leaks often go hand in hand with other resource leaks, especially when external objects like file handles or database connections aren't released.

You’ll notice:

- Too many open files or sockets in system-level diagnostics

- Connection pool exhaustion in databases or APIs

- Services locking up due to hanging threads or blocked operations

How to Debug and Resolve Memory Leaks

Now that you know why memory leaks matter and how they manifest, you are ready to start resolving them. Here’s a step-by-step approach that works across most languages and platforms.

1. Pick the Right Tool for Your Language

The first step is to choose a memory profiling or analysis tool that fits your environment. Each language has its own ecosystem of tools designed to detect memory usage patterns and leaks.

C/C++:

- Valgrind (memcheck): Tracks memory allocation and highlights leaks, invalid accesses, and unfreed blocks.

- AddressSanitizer: A compiler-based alternative that is typically much faster than Valgrind. Enable it in GCC or Clang with -fsanitize=address.

Java:

- VisualVM: Profiler for analyzing heap, threads, and memory usage. It was bundled with JDK 6 through 8 and is a separate download for newer JDKs.

- Eclipse MAT (Memory Analyzer Tool): Helps analyze heap dumps and detect memory leaks.

Python:

- objgraph: Visualizes object references and helps detect leaks in memory-heavy Python apps.

- tracemalloc: Built-in module in Python 3 for tracking memory allocations.

JavaScript:

- Chrome DevTools: Provides a heap snapshot tool to inspect object references and memory growth over time.

- Firefox Developer Tools: Similar memory analysis capabilities with cycle detection.

Node.js:

- clinic.js, heapdump, and memwatch-next: Tools for tracking and analyzing memory usage and leaks in server-side JS apps.

2. Set Up and Configure the Tool

Once you’ve selected a tool, the next step is to configure your app or environment to work with it. For example, you may:

- Run your app under the tool or with debugging flags (valgrind --leak-check=full ./app, --inspect for Node.js, etc.)

- Capture a heap snapshot at regular intervals

- Enable detailed logging for allocations and deallocations

- Reproduce a leak-prone scenario (e.g., simulate user sessions or data processing loops)

Some tools may require you to compile with debugging symbols or run in a special mode to get accurate results.

3. Reproduce the Issue Under Observation

Leaks often show up only after certain actions are repeated or the app has been running for a while. Trigger the suspected behavior while monitoring memory usage:

- Open and close modals repeatedly

- Submit large datasets

- Switch views multiple times

- Simulate long-running sessions

This helps surface leaks that only appear after repeated usage or under load.
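A repeatable harness makes this step less dependent on manual clicking. The Python sketch below repeats a suspected action in rounds and records traced memory after each round using the standard tracemalloc module. handle_session and its deliberately leaky list are hypothetical stand-ins for your own code path.

```python
import tracemalloc

_leaked_sessions = []   # hypothetical leak: session data is appended but never removed


def handle_session(session_id):
    """Stand-in for the suspected code path, e.g. opening and closing a view."""
    payload = bytearray(10_000)
    _leaked_sessions.append((session_id, payload))


def sample_memory(action, rounds=5, calls_per_round=200):
    """Repeat `action` and record traced memory (in bytes) after each round."""
    samples = []
    tracemalloc.start()
    try:
        for round_index in range(rounds):
            for call_index in range(calls_per_round):
                action(round_index * calls_per_round + call_index)
            current, _peak = tracemalloc.get_traced_memory()
            samples.append(current)
    finally:
        tracemalloc.stop()
    return samples


samples = sample_memory(handle_session)
steadily_growing = all(later > earlier for earlier, later in zip(samples, samples[1:]))
```

A series that rises every round, as it does here, is a strong hint that the repeated action retains memory. A healthy code path should level off after the first round or two.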

4. Analyze the Output

Once the data is collected, look for signs of memory that was allocated but never released:

- In Valgrind, check for “definitely lost” blocks and their origin points

- In Chrome DevTools, compare heap snapshots and look for growing object counts

- In VisualVM, track classes with growing instance counts or retained sizes

- In Python’s tracemalloc, compare snapshots to see where memory usage increases the most

Focus on objects that grow over time or are still in memory long after they should be gone.
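As an illustration of the tracemalloc case, the sketch below takes a snapshot before and after a suspect workload and compares them by source line. leaky_step and the retained list are hypothetical and exist only so the comparison has something to find.

```python
import tracemalloc

retained = []   # hypothetical leak used only to give the comparison something to find


def leaky_step():
    retained.append(bytearray(50_000))


tracemalloc.start()
before = tracemalloc.take_snapshot()

for _ in range(100):
    leaky_step()

after = tracemalloc.take_snapshot()
tracemalloc.stop()

# Differences are sorted so the largest change comes first.
top_stats = after.compare_to(before, "lineno")
biggest = top_stats[0]
report = [str(stat) for stat in top_stats[:3]]
```

Printing the entries in report shows the file and line responsible for each difference, which is usually enough to decide where to look next.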

5. Identify the Root Cause

Use the stack traces or reference chains provided by the tool to pinpoint where objects are being held unnecessarily. For example, if you’re using Valgrind and you see output like this:

==1234== 20 bytes in 1 blocks are definitely lost in loss record 1 of 1
==1234==    at 0x4C2FB55: malloc (vg_replace_malloc.c:299)
==1234==    by 0x4005B3: allocate_buffer (main.c:12)
==1234==    by 0x4005D1: main (main.c:25)

It means 20 bytes were allocated in allocate_buffer() at line 12 of main.c and never freed. Looking at the code:

#include <stdlib.h>

char* allocate_buffer() {
    char* buffer = (char*)malloc(20);
    return buffer; // ownership passes to the caller
}

int main() {
    allocate_buffer(); // return value discarded, so the buffer is never freed
    return 0;
}

You can tell that the memory allocated inside allocate_buffer() is never freed, and the pointer is not stored or used later. Since nothing frees that memory, Valgrind flags it as definitely lost, a clear sign of a memory leak at this point in the code.
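In a garbage-collected language the question is slightly different: not "who forgot to free this?" but "who is still holding a reference to this?". In Python, the standard gc.get_referrers() function can answer that for a single suspect object. The sketch below is a minimal illustration; Session, registry, and find_holders are hypothetical names.

```python
import gc


class Session:
    def __init__(self, user):
        self.user = user


registry = {}            # hypothetical long-lived structure that was never cleaned up


def open_session(user):
    session = Session(user)
    registry[user] = session
    return session


def find_holders(obj):
    """Return a description of each container that still references obj."""
    holders = []
    for referrer in gc.get_referrers(obj):
        if isinstance(referrer, dict):
            keys = [key for key, value in referrer.items() if value is obj]
            holders.append(f"dict via keys {keys}")
        elif isinstance(referrer, (list, tuple, set)):
            holders.append(f"{type(referrer).__name__} of length {len(referrer)}")
    return holders


suspect = open_session("alice")
holders = find_holders(suspect)
```

Here the result includes a dictionary that holds the session under the key 'alice', which points to registry as the structure that needs cleanup logic. The variable that names the suspect also shows up as a holder, so expect some noise in real output.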

6. Fix the Code

Once you’ve identified the leak, apply the fix:

- Remove or null out unnecessary references

- Unregister event listeners

- Break circular references manually

- Use weak references where supported (WeakMap, WeakRef, etc.)

- Close files, sockets, or DB connections when done

- Cap cache size or expire old entries

- In C/C++, make sure every malloc() is matched with a free() and every new with a delete

In our previous example, the fix would be to make sure the memory allocated inside allocate_buffer() is either freed or properly returned and later freed by the caller. Here’s a corrected version of the code:

#include <stdlib.h>

char* allocate_buffer() {
    char* buffer = (char*)malloc(20);
    return buffer;
}

int main() {
    char* data = allocate_buffer();
    // use the buffer...
    free(data); // properly free the memory
    return 0;
}

This way, the memory is explicitly released when it's no longer needed, and Valgrind will no longer report it as leaked.
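For garbage-collected languages, one of the fixes listed above is to use weak references. As a sketch of what that looks like in Python, the cache below uses weakref.WeakValueDictionary from the standard library, so an entry disappears once nothing else is using the object. Image and load_image are hypothetical names, and the immediate cleanup shown relies on CPython's reference counting.

```python
import gc
import weakref


class Image:
    """Stand-in for an expensive object; plain classes support weak references."""

    def __init__(self, name):
        self.name = name
        self.pixels = bytearray(100_000)


image_cache = weakref.WeakValueDictionary()


def load_image(name):
    image = image_cache.get(name)
    if image is None:
        image = Image(name)
        image_cache[name] = image   # the cache alone will not keep the image alive
    return image


logo = load_image("logo.png")
same_logo = load_image("logo.png")
cached_while_in_use = same_logo is logo

del logo, same_logo                 # drop the last strong references
gc.collect()
entries_after_release = len(image_cache)
```

The trade-off is that a weak cache only helps while something else still holds the object. If you need entries to survive between uses, a size-limited cache is the better fit.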

7. Re-test and Validate

After fixing the leak, run the app again under the same conditions and compare memory usage:

- Does memory stabilize over time?

- Are heap snapshots smaller or flat?

- Are GC logs less noisy?

- Do previously failing sessions now run clean?
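These checks can be turned into an automated comparison. The Python sketch below measures how much traced memory a handler retains after a warm-up period, then compares a leaky version with a fixed one. The handlers, the shared history list, and the choice of keeping ten entries are all hypothetical.

```python
import tracemalloc


def memory_growth(action, warmup=50, iterations=500):
    """Return how many bytes of traced memory `action` retains across `iterations` calls."""
    tracemalloc.start()
    try:
        for i in range(warmup):            # let one-time allocations settle first
            action(i)
        baseline, _ = tracemalloc.get_traced_memory()
        for i in range(iterations):
            action(warmup + i)
        current, _ = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return current - baseline


history = []                               # hypothetical state shared by both versions


def leaky_handler(request_id):
    history.append(bytearray(10_000))      # before the fix: grows forever


def fixed_handler(request_id):
    history.append(bytearray(10_000))
    del history[:-10]                      # after the fix: keep only the last 10 entries


leaky_growth = memory_growth(leaky_handler)
history.clear()
fixed_growth = memory_growth(fixed_handler)
```

In a test suite, you could assert that the measured growth stays under a budget you choose, so a regression that reintroduces the leak fails the build.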

Best Practices to Avoid Memory Leaks

Finally, here are some best practices that will help you avoid most memory leaks:

- Always release memory or resources when you're done using them, whether it's manual (like free() in C) or through cleanup logic in higher-level languages.

- Keep your functions and objects short-lived unless there's a clear reason they need to persist.

- Unregister event listeners, timers, observers, and other callbacks when the associated component or object is destroyed.

- Avoid unnecessary global variables or long-lived singletons that can hold onto memory.

- Use weak references where available for caches or other non-critical references.

- Limit the size and lifetime of in-memory caches or buffers.

- Monitor memory usage over time, especially in long-running or high-load applications.

- Write unit or integration tests that simulate long sessions or repeated user interactions to catch early signs of leaks.

- Use memory profiling tools regularly, not just when things break.

- Prefer immutable data structures where possible to reduce unintended memory retention.

- Avoid creating deep object graphs unless necessary, as they can make leaks harder to detect.

- In UI frameworks, make sure components clean up after themselves during unmount or teardown.

- Periodically audit long-running services or daemons for memory usage trends and retention patterns.

- Set sensible defaults and limits for background tasks, queues, or worker pools to prevent unbounded memory growth.

- In multithreaded environments, avoid shared memory patterns that rely on manual cleanup unless you're fully controlling object lifetimes.

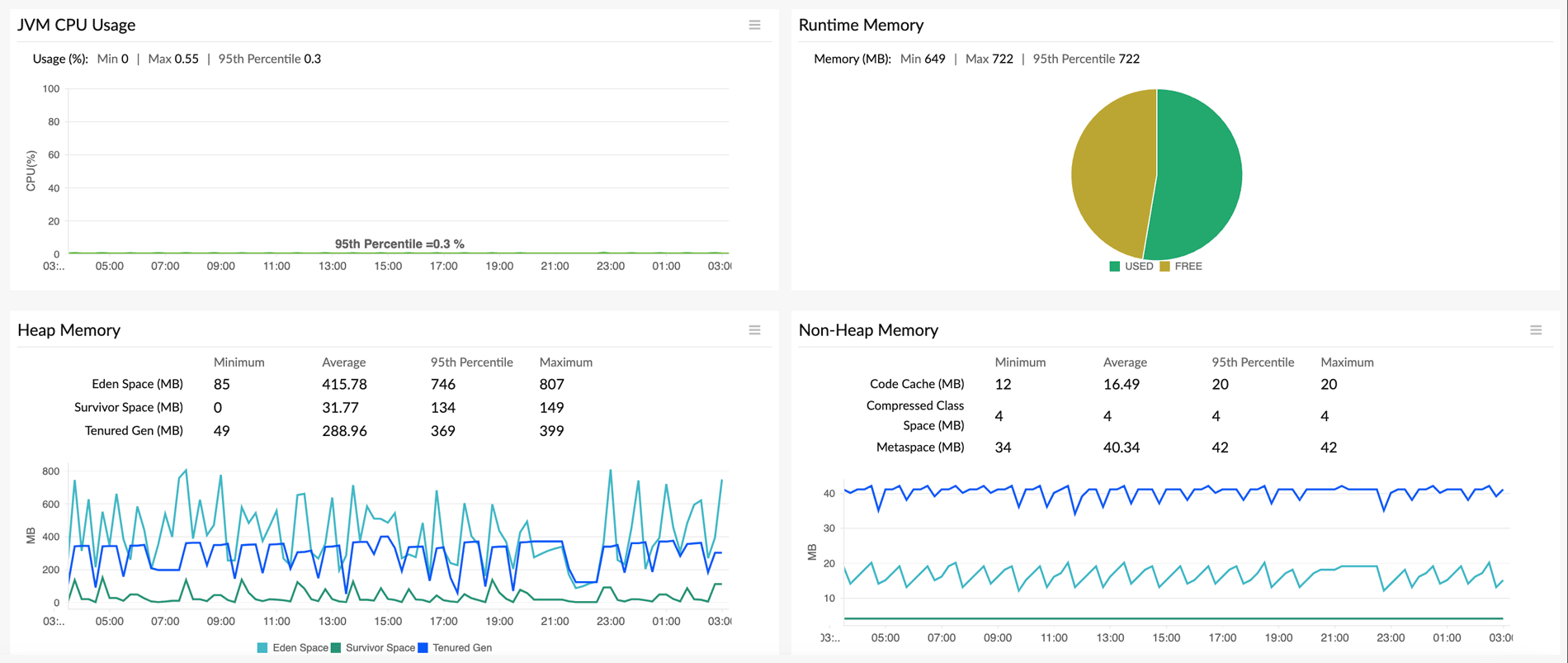

- Use a dedicated tool like Site24x7 to monitor your memory utilization over time using key metrics like heap usage, garbage collection activity, memory allocation rates, and process-level memory consumption.

Monitoring and Observability for Memory Leak Prevention

Memory leaks represent a critical class of performance issues that can silently degrade application performance, increase infrastructure costs, and ultimately impact user experience. Whether you're developing in C++, Java, Python, Node.js, or any other language, understanding the mechanisms behind memory leaks—from unreleased references and event listeners to circular dependencies and unmanaged resources—is essential for building stable, scalable applications.

The debugging process we've outlined—choosing appropriate profiling tools, reproducing issues systematically, analyzing output carefully, and validating fixes—provides a structured methodology that applies across different technology stacks. By combining proactive best practices with reactive debugging techniques, you can significantly reduce the occurrence and severity of memory-related issues in your applications.

However, manual debugging and isolated testing can only catch leaks during development. In production environments, continuous monitoring becomes essential. This is where Application Performance Monitoring (APM) and comprehensive observability solutions play a critical role. Real-time monitoring of memory consumption patterns, garbage collection behavior, heap utilization trends, and process-level metrics enables you to:

- Detect anomalies early: Identify gradual memory growth before it becomes critical through continuous baseline tracking

- Correlate with user impact: Connect memory issues with actual performance degradation and user-facing symptoms through real user monitoring (RUM)

- Enable faster resolution: Reduce mean time to resolution (MTTR) with detailed historical data and contextual insights

- Optimize infrastructure costs: Right-size your cloud resources by understanding actual memory utilization patterns

Site24x7's application performance monitoring platform provides end-to-end visibility into memory behavior across your entire stack, with dedicated language-specific solutions. Its full-stack observability approach goes beyond memory metrics alone. It integrates log management to correlate memory metrics with application logs when tracing leak root causes, distributed tracing to show which transactions and code paths consume the most memory, and real user monitoring to show how memory issues manifest as latency spikes, slowdowns, or errors for actual users.

By combining the debugging and prevention techniques outlined in this guide with continuous monitoring through APM, you can build applications that maintain stable memory usage throughout their lifecycle, even under production load and unexpected usage patterns.